NISAR: An all-seeing eye on Earth

Assembly of the NISAR satellite at ISRO, Ahmedabad

From the ‘clean rooms’ and high-security halls of ISRO in Bengaluru comes a satellite unlike any built before. The NASA-ISRO Synthetic Aperture Radar (NISAR) mission not only marks the most ambitious collaboration between India and the US in space, but also sets a new gold standard for Earth observation.

What makes NISAR a trailblazer? Its core is a unique marriage of two radar systems, each with its own “superpower”.

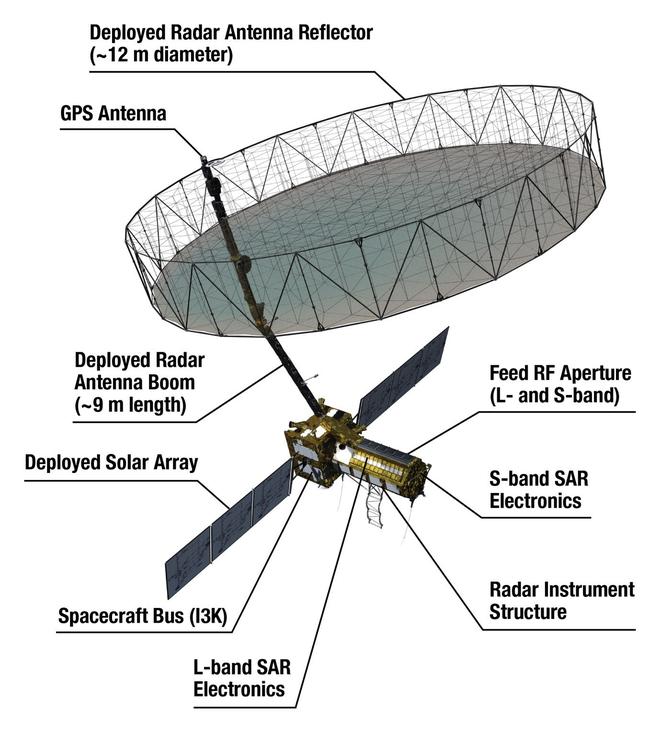

Dual-frequency innovation is the heart of NISAR. L-band radar (provided by NASA), with a wavelength of 24 cm, effortlessly penetrates dense forests, sees the skeleton of landscapes, and monitors changes in vegetation and topography — even through thick cloud cover. S-band radar (engineered by ISRO), at 10 cm wavelength, excels at recording subtle changes in soil, wetland, and ice, even in challenging equatorial and polar environments.
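The 24 cm and 10 cm figures follow from each radar's carrier frequency through the relation λ = c/f. The short Python sketch below checks this. The centre frequencies it uses (about 1.257 GHz for L-band and 3.2 GHz for S-band) are assumed illustrative values and do not come from this article.

```python
# Speed of light in vacuum, in metres per second.
C = 299_792_458.0

# Assumed centre frequencies in hertz (illustrative, not taken from the article).
BANDS = {
    "L-band": 1.257e9,
    "S-band": 3.2e9,
}


def wavelength_m(frequency_hz: float) -> float:
    """Return the free-space wavelength, lambda = c / f, in metres."""
    return C / frequency_hz


if __name__ == "__main__":
    # Print each band's wavelength in centimetres, to one decimal place.
    for name, f in BANDS.items():
        print(f"{name}: {wavelength_m(f) * 100:.1f} cm")
```

With these assumed frequencies the script prints about 23.8 cm and 9.4 cm, consistent with the rounded 24 cm and 10 cm quoted above. The longer L-band wave is the one that reaches through forest canopies.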

This dual-frequency setup is a first not only for India but for any free-flying space observatory in the world. Because it images in both bands simultaneously, NISAR can reveal what single-frequency satellites cannot.

Bold engineering

ISRO’s imprint on NISAR is unmistakable. Indian engineers took on the formidable challenge of designing, fabricating, and testing the S-band SAR unit and the satellite’s core bus.


Their achievements include the S-band SAR payload, which captures the high-resolution images critical for tracking natural disasters such as floods and landslides, as well as changes to coastlines.

The ‘chassis’ supporting the payloads, also built by ISRO, integrates sophisticated power, control, and thermal systems to keep NISAR’s sensitive electronics safe in the harsh conditions of space.

Set to launch from Sriharikota aboard ISRO’s trusted Geosynchronous Satellite Launch Vehicle (GSLV), NISAR is a testament to India’s prowess in deploying heavy satellites.

Indian teams at the U R Rao Satellite Centre in Bengaluru carried out the precision integration. They tested the massive radar payload and reflector at ISRO’s state-of-the-art antenna facilities, simulating harsh space conditions and verifying that the systems work together.

Central to NISAR’s uniqueness is its SweepSAR digital beamforming architecture.

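Conventional spaceborne radars must trade swath width against resolution. SweepSAR’s ‘scan-on-receive’ technique sidesteps that trade-off. The transmitted pulse illuminates an astonishing swath more than 240 km wide, and the receive beam then sweeps across the ground to follow the returning echoes, maintaining fine resolution throughout.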

The 12-m deployable mesh reflector, among the world’s largest, unfolds in orbit to catch the faint returning radar echoes with surgical precision, even as the reflector rotates and the spacecraft whizzes overhead. Each of the hundreds of feed elements in the antenna can be directed and processed independently, making NISAR an agile and dynamic observer, not a passive scanner.
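The scan-on-receive idea can be illustrated with a simplified timing model. An echo that arrives t seconds after a pulse is sent comes from slant range c·t/2. The receiver can therefore listen with whichever feed element looks at that range, so the active element sweeps from the near edge of the swath to the far edge as the echo arrives.

The Python sketch below rests on several assumptions:

* flat-Earth geometry
* an illustrative 747 km altitude
* a hypothetical 240 km swath starting 250 km from the ground track
* a hypothetical 12 feed elements, each covering an equal slice of slant range

The real instrument blends adjacent elements with digital beamforming weights rather than picking a single one.

```python
import math

C = 299_792_458.0  # speed of light, m/s

# Illustrative geometry (assumptions, not NISAR specifications).
ALTITUDE_M = 747e3      # assumed orbit altitude
NEAR_GROUND_M = 250e3   # hypothetical near edge of the swath, from the ground track
SWATH_M = 240e3         # swath width quoted in the article
N_FEEDS = 12            # hypothetical number of feed elements


def slant_range(altitude_m: float, ground_range_m: float) -> float:
    """Flat-Earth straight-line distance from the radar to a ground point."""
    return math.hypot(altitude_m, ground_range_m)


def active_feed(t_s: float, r_near: float, r_far: float, n_feeds: int):
    """Return the index of the feed element to listen with at time t_s.

    An echo received t_s seconds after the pulse left comes from slant range
    c * t_s / 2 (two-way travel). The swath [r_near, r_far) is split into
    n_feeds equal slant-range slices, one per element. Outside that echo
    window the function returns None.
    """
    r = C * t_s / 2.0
    if r < r_near or r >= r_far:
        return None
    return int((r - r_near) / (r_far - r_near) * n_feeds)


# Slant ranges to the near and far edges of the illustrative swath.
R_NEAR = slant_range(ALTITUDE_M, NEAR_GROUND_M)
R_FAR = slant_range(ALTITUDE_M, NEAR_GROUND_M + SWATH_M)

if __name__ == "__main__":
    t_start, t_end = 2 * R_NEAR / C, 2 * R_FAR / C
    # Sample the echo window to show the receive beam sweeping outward.
    steps = 6
    for i in range(steps):
        t = t_start + (t_end - t_start) * (i + 0.25) / steps
        print(f"t = {t * 1e3:.3f} ms -> feed {active_feed(t, R_NEAR, R_FAR, N_FEEDS)}")
```

Running the script shows the selected feed index climbing through the echo window, which is the ‘sweep’ in SweepSAR. Echoes from each part of the swath are caught by the part of the antenna pointed at it, instead of the whole swath being heard through one wide, low-gain beam.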

Giant leap

NISAR is the result of a decade of technical exchanges between ISRO and NASA, involving hardware, know-how, and joint mission operations.

NISAR assembly and antenna testing at ISRO, Bengaluru

Mapping the entire globe every 12 days, NISAR will measure everything from tree heights and crop yields to glacier cracks and earthquake-triggered landslides, providing a steady stream of insights into a warming, shifting Earth. Its freely available data will help planners predict floods, monitor water resources, and respond to disasters, potentially saving lives in India and across the globe. All data will be accessible to researchers worldwide within days, and even faster in emergencies.
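The 12-day global cycle also sets how often any given spot is revisited. The Python sketch below lists the dates on which one location would be imaged, assuming a strict 12-day repeat from a hypothetical first-pass date. It ignores any additional looks from other orbit passes, so it is a simple lower bound on revisit frequency and not an actual NISAR acquisition plan.

```python
from datetime import date, timedelta

REPEAT_DAYS = 12  # global repeat cycle quoted in the article


def revisit_dates(first_pass: date, n_passes: int, repeat_days: int = REPEAT_DAYS) -> list[date]:
    """Return the dates of n_passes consecutive passes over one location,
    assuming a strict repeat of repeat_days starting at first_pass."""
    return [first_pass + timedelta(days=repeat_days * k) for k in range(n_passes)]


def cycles_per_year(repeat_days: int = REPEAT_DAYS, days_in_year: int = 365) -> int:
    """Number of complete repeat cycles that fit in one year."""
    return days_in_year // repeat_days


if __name__ == "__main__":
    start = date(2025, 11, 1)  # hypothetical first-pass date
    for d in revisit_dates(start, 5):
        print(d.isoformat())
    print("Complete 12-day cycles per year:", cycles_per_year())
```

Thirty complete cycles a year means each spot on Earth contributes a dense time series. Slow changes, such as a sinking aquifer or a creeping glacier, can then be separated from sudden ones like a landslide.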

The project embodies a commitment to global science and transparency.

A feather in ISRO’s cap, NISAR has deep roots in Indian soil. From the assembly lines in Bengaluru to outreach events in Gujarat Science City, Indian scientists and engineers are at the core of this international effort. The satellite will not just survey distant regions but also provide critical data for India’s monsoon management, forest conservation, and river basin planning — delivering local as well as global impact.

As NISAR prepares for launch, ISRO’s contribution — and India’s growing technological might — have never been more visible or celebrated.

This is not just a mission. It is a symbol of collaboration, innovation, and the power of vision to make the invisible visible.

(The writer is a former associate project director of NISAR at ISRO)


Published on July 28, 2025