A growing body of scientific literature suggests that ‘ionic liquids’ (ILs) might just be the solution (pun intended) to the problem of extracting valuable metals from used batteries. ILs, sometimes colourfully described as ‘designer solvents’, could be the battery recycler’s dream come true.

That ‘battery recycling’ is an emerging, fast-growing industry is not in doubt. A November 2023 report by Avendus Capital projected that demand for lithium-ion batteries would reach 235 GWh by 2030, and that the recycling industry would grow in step, to 23 GWh, worth $1 billion. Since batteries account for at least 30 per cent of the cost of an electric vehicle, recovering metals such as lithium, nickel, cobalt and manganese from used batteries makes economic sense.

While there are many ways of mining battery waste, the most commonly used is ‘hydrometallurgy’: essentially ‘dissolve and separate’, a process that sometimes uses harmful chemicals. Now scientists are saying that ionic liquids, known for a century, could find a new purpose in extracting useful metals from used batteries.

What are ionic liquids?

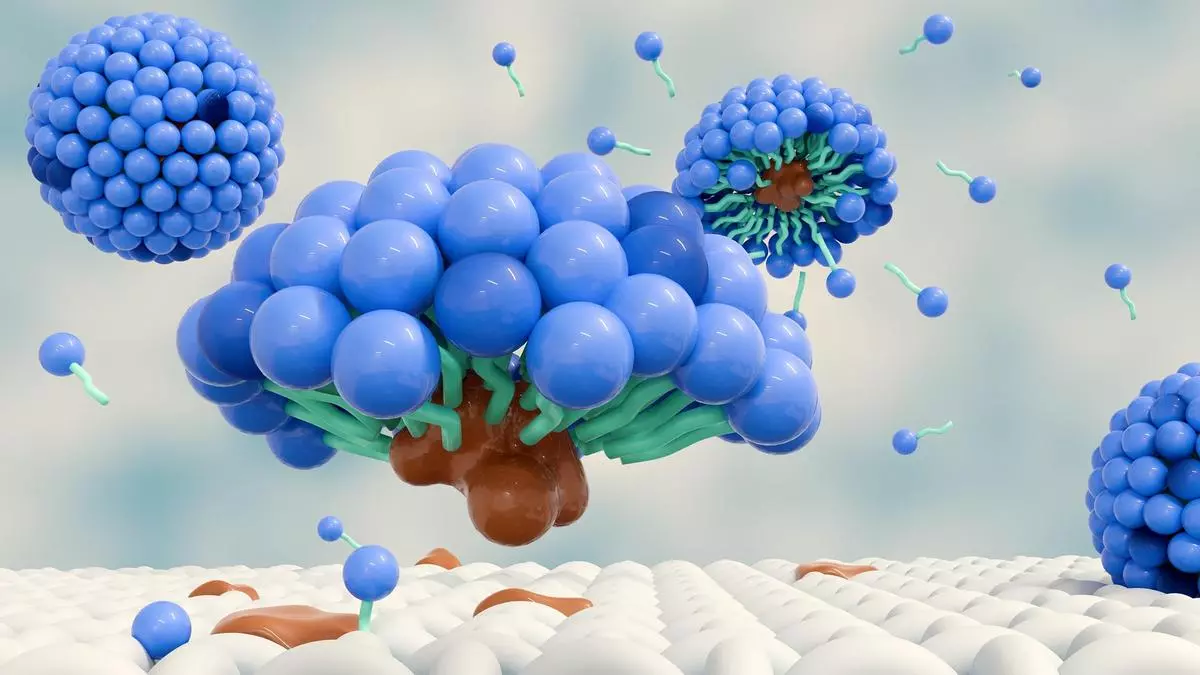

Liquids are typically composed of electrically neutral molecules. In contrast, ionic liquids (IL) are made entirely of ions — positively charged cations and negatively charged anions.

In most salts, cations and anions pack tightly into a crystal lattice, which is why salts are typically solids at room temperature and need a large amount of heat to melt. In ILs, the cations and anions are asymmetric and cannot pack neatly, so the salt stays liquid. ILs are essentially salts that are liquid at temperatures below 100°C. They are highly adaptable liquid salts with negligible volatility and a wide range of industrial and scientific applications. Their unique properties, such as low melting points and tunability, make them valuable in areas like green chemistry, electrochemistry and materials science. By selecting different cations and anions, the physical and chemical properties of ionic liquids, such as viscosity, density, solubility and conductivity, can be precisely tailored for specific applications.

In other words, you can create your own IL for a specific use by picking cations and anions off the shelf. Such ILs, made by carefully selecting a combination of cations and anions to produce a salt with the desired properties, are called ‘task-specific ionic liquids’ (TSILs). Horses for courses: you can design an IL to extract a particular metal.

ILs are often described as environment-friendly, and they can dissolve a wide range of substances: organic, inorganic and polymeric. “Creating new cations and anions, and incorporating suitable functional groups can impart the exact physical properties essential for each application at the core of the ILs designing process,” says a review study conducted by a group of scientists from CSIR and IIT-Madras. “With appropriate design, ionic liquids can exhibit advantages such as low volatility, high stability, a wide liquid range, high conductivity and high solubility,” the study says.

“Due to its heterogeneous composition, discarded rechargeable batteries (LIBs, NiMHs) are difficult to separate for nickel, cobalt, lithium, manganese, zinc and copper,” says Prof Tamal Banerjee of the Department of Chemical Engineering, IIT Guwahati. “New cations and anions within new solvents such as ionic liquids have gained huge interest,” he observes, in a write-up in IIT-M TechTalk.

While the scientific world is looking at ILs with renewed interest, by all accounts the industry is a bit circumspect. Ashish Bansal, Managing Director, Pondy Oxides & Chemicals Ltd, which recycles materials and is now setting up a plant to extract metals from lithium-ion batteries, says the use of ionic liquids as solvents for extracting metals from used lithium-ion batteries “is progressing well on the R&D scale.” In an emailed response to quantum, Bansal observed that ILs have an “ability to selectively extract metals at a certain pH, RPM and time period in the leaching process.” ILs are also environment-friendly, he said, because they are easy to dispose of and restore, and are inherently suited to eco-friendly recycling compared with other leaching agents.

However, the company is not ready to use ILs yet, because of “certain shortcomings”, mainly their higher cost compared with conventional leaching agents. “There is a need for further commercial-scale development before the process can be profitably scaled up for the recycling of lithium-ion batteries in a sustainable manner,” Bansal said.

Recovery is key

The focus of scientific research is shifting to recycling ILs, to make them economically viable. “Numerous IL recycling techniques, such as distillation, membrane separation, ATPS, extraction and adsorption, have been introduced to recycle ILs. All these IL recovery methods have their own pros and cons,” notes a scientific paper published by a group of Singapore-based researchers. (ATPS stands for aqueous two-phase systems.)

For example, ‘membrane separation’ requires less capital investment, but yields are low. ‘Distillation’ is effective but energy-intensive. ‘Extraction’ calls for additional solvents. If researchers can crack the recovery of ILs, they will have a game-changer on their hands.

Published on August 18, 2024