India’s IT services leaders are ramping up their semiconductor design and AI-driven engineering capabilities as global chipmakers deepen their presence in the country. Analysts expect the work to remain a specialised niche, centred on chip design, verification and fab-level AI systems rather than a cloud- or AI-scale breakout. Still, the segment is expanding steadily, and some industry experts see room for much larger growth.

Semiconductors are the foundation of modern technology, from AI and cloud to automotive and industrial automation. Global chip shortages and the surge in demand for advanced electronics have created a strategic inflection point.

Gilroy Mathew, COO of UST, said semiconductors are one of the company’s fastest-growing verticals, alongside healthcare and BFSI (banking, financial services and insurance).

“This segment is projected to deliver double-digit growth within our ER&D portfolio over the next five years. We have been a pioneer in the semiconductor business for the last 15 years. As of today, we have a dedicated 10,000-member team serving 15 out of the top 20 semiconductor companies globally; it provides world-class engineering solutions to our clients on R&D, Hardware, Software, and ATE,” he noted.

Expertise

Globally, UST serves chipmakers with expertise in design, verification and embedded systems. At home, India’s semiconductor mission and the production-linked incentive (PLI) and design-linked incentive (DLI) schemes have opened new opportunities for the company, he said.

“Our recent ₹3,330 crore OSAT joint venture with Kaynes Semicon in Gujarat is aimed at strengthening India’s semiconductor value chain. This partnership enables us to provide a full ATE solution to our global clients, including OSAT. We are providing an alternative back-end manufacturing option to our clients. UST is building end-to-end capabilities, including pre-silicon design (ASIC, FPGA, SoC architecture), post silicon validation, advanced EDA automation and manufacturing process support through OSAT partnerships,” Mathew explained.

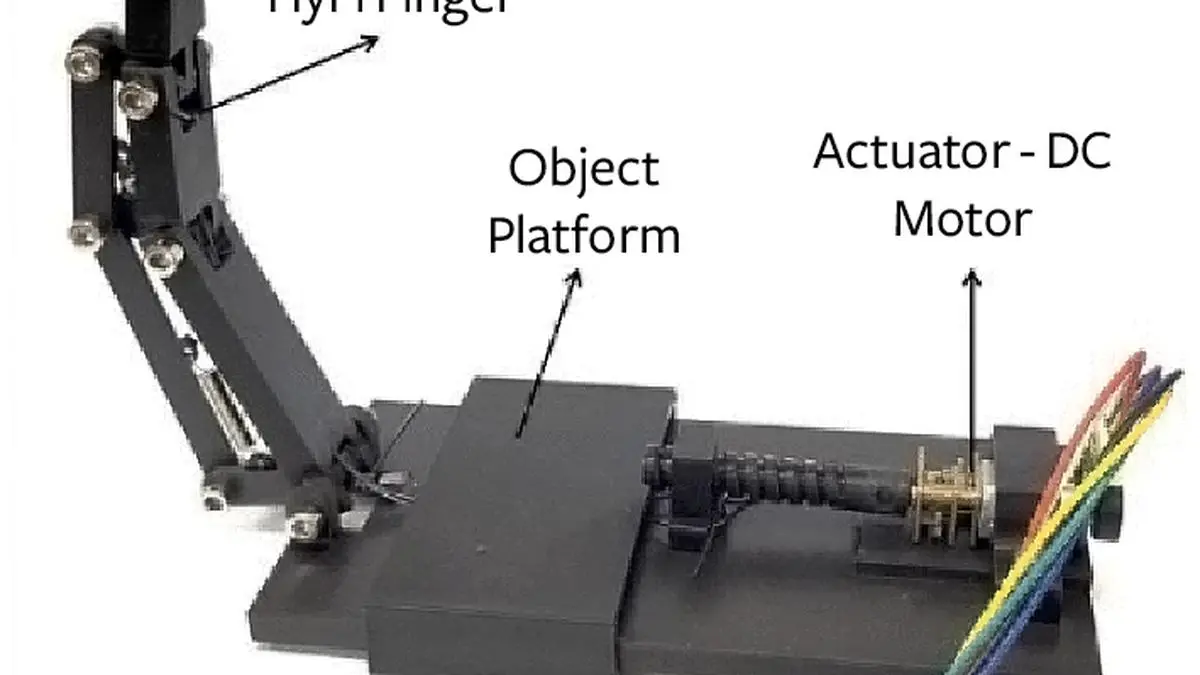

Beyond design services, semiconductor manufacturing tools generate vast amounts of data. This data can be used to build machine-learning and AI-based predictive maintenance systems that reduce tool downtime and improve overall fab yield.

Omprakash Subbarao, CEO of IISc’s FSID CORE Labs, said AI-driven defect inspection during wafer production and packaging will further improve yield, benefiting both individual tools and the fab as a whole. He added that advanced digital twins of tools and fabs will support better training for fab engineers and enable real-time remote monitoring of processes and equipment.

“This space is set to become a much larger market, and its growth is already well underway. A growing number of companies are now focusing on advanced tool development, moving beyond purely service-oriented work. Start-ups mentored by established tool makers, fabs, and VLSI design houses can accelerate innovation and strengthen the overall ecosystem far more effectively,” he said.

The India Semiconductor Mission (ISM) is building a manufacturing ecosystem that spans production, assembly, packaging and testing at scale. This will create opportunities for IT services firms to enable smart manufacturing, digital transformation and automation across semiconductor fabs and OSAT facilities. As advanced machinery, robotics and AI-driven workflows become integral to these facilities, technology will play a critical role in optimising operations, ensuring quality and driving efficiency.

Biswajeet Mahapatra, Principal Analyst at Forrester, said India’s IT services firms are unlikely to gain a broad competitive edge across the semiconductor value chain, because core manufacturing and fabrication remain capital-intensive and dominated by global giants. They can, however, carve out a niche in design, verification and the software-driven aspects of chip development, drawing on their engineering talent and experience in complex systems integration. This will likely remain a specialised play rather than a mainstream focus.

“While semiconductor design and engineering could emerge as a meaningful growth vertical, it will not match the scale of cloud or AI in the near term. The opportunity lies in high-value design services, embedded software, and verification, driven by global demand for advanced chips in AI, automotive, and IoT. Growth will depend on partnerships with semiconductor firms and building domain expertise, making it more of an adjacent vertical than a dominant pillar,” he said.

Revenue potential will be modest compared with cloud or AI, likely in the low single digits as a percentage of overall IT services revenue over the next three to five years. For large players, this could translate into hundreds of millions of dollars rather than billions, driven by specialised design and verification projects for global semiconductor clients.

Published on November 16, 2025